Guardsquare Achieves MAS Advocate Status

We are very happy to announce that Guardsquare has officially been granted MAS Advocate status, the highest recognition within the OWASP Mobile Application Security (MAS) project.

This status isn't handed out lightly. As outlined in our official guidelines, it's reserved for organizations that go beyond occasional contributions and demonstrate consistent, high-impact work over a sustained period, dedicating not just technical resources but genuine commitment and passion to the OWASP MAS project. The path to MAS Advocate demands at least six months of proven, impactful support and, in practice, often much longer, depending on the scope and depth of the contributions.

Guardsquare's Commitment

Since their application on February 7, 2023, Guardsquare has increasingly met our expectations and exceeded them on many occasions. While the MAS Advocate process requires a minimum of six months of consistent engagement, their journey extended well beyond that, reflecting the depth, consistency, and impact of their contributions.

Highlights of Guardsquare's involvement include:

- Active participation in the MAS Task Force: Guardsquare has been a consistent presence in our monthly calls, bringing ideas, asking questions, and proactively pushing for core initiatives.

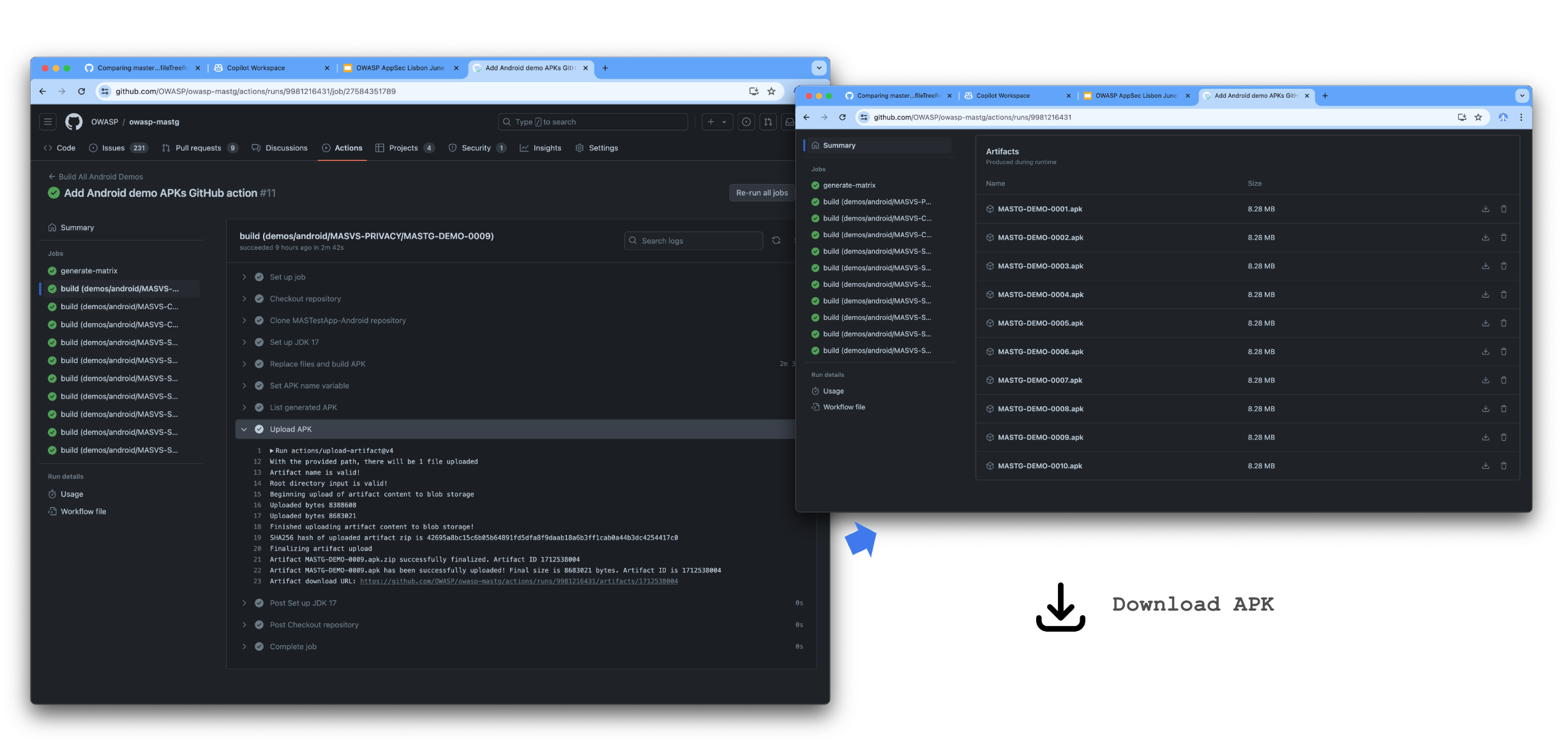

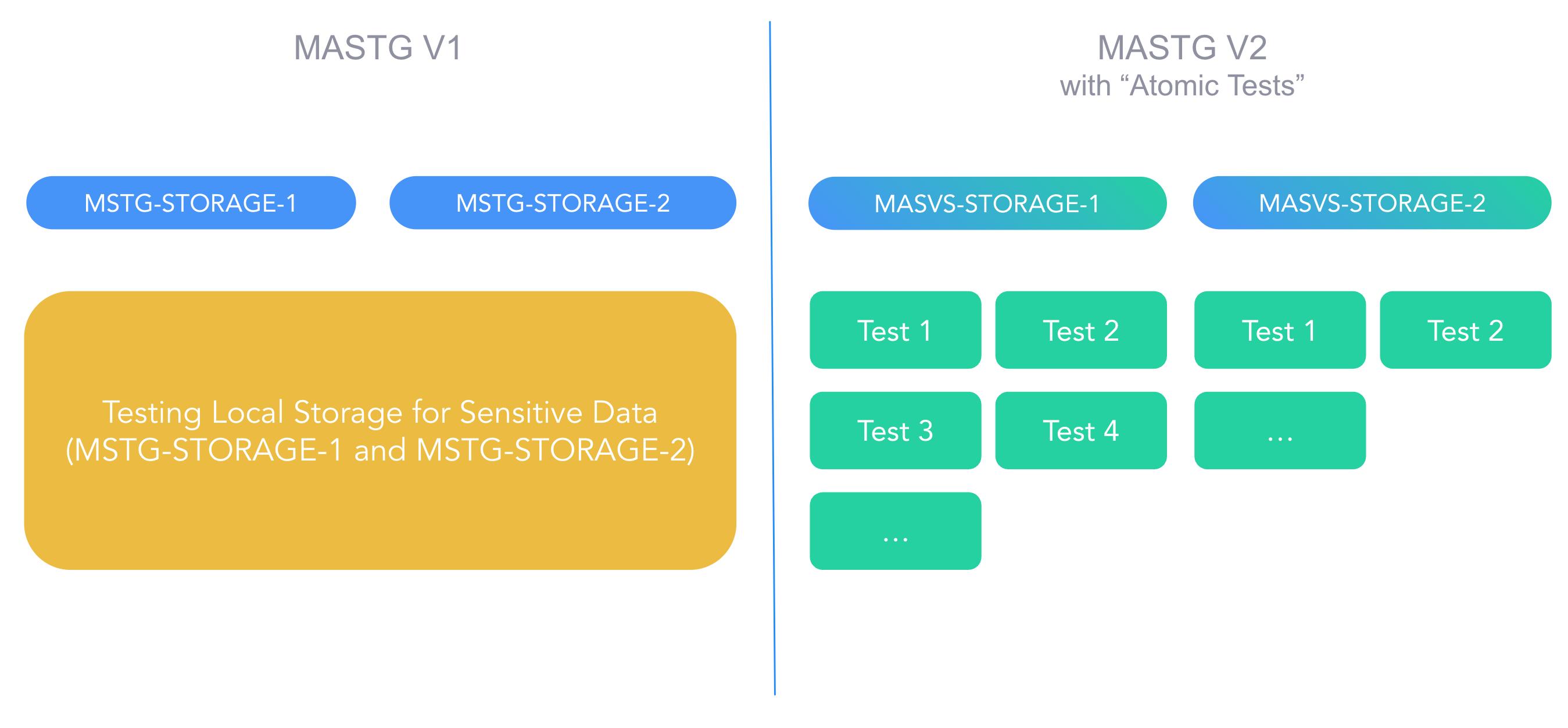

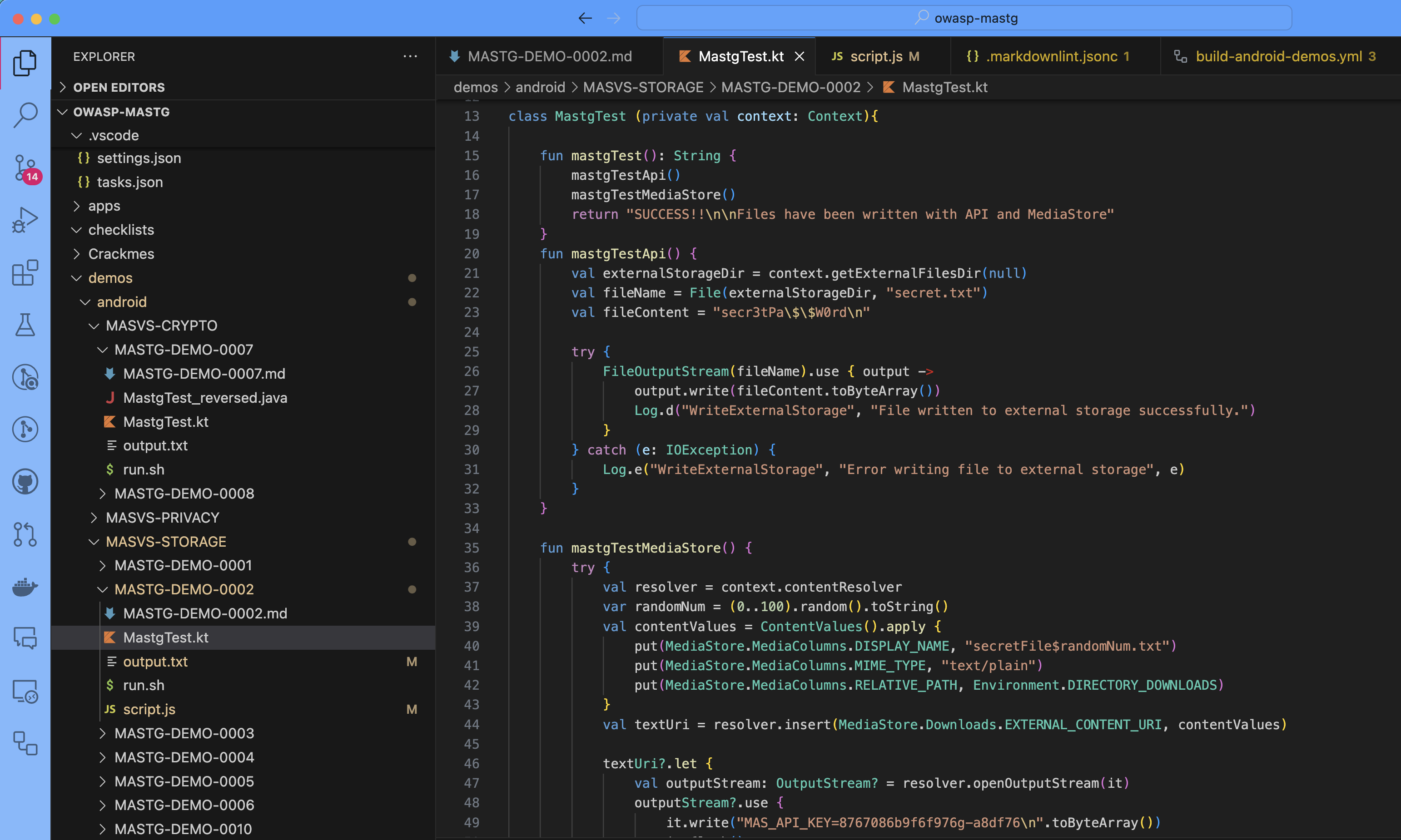

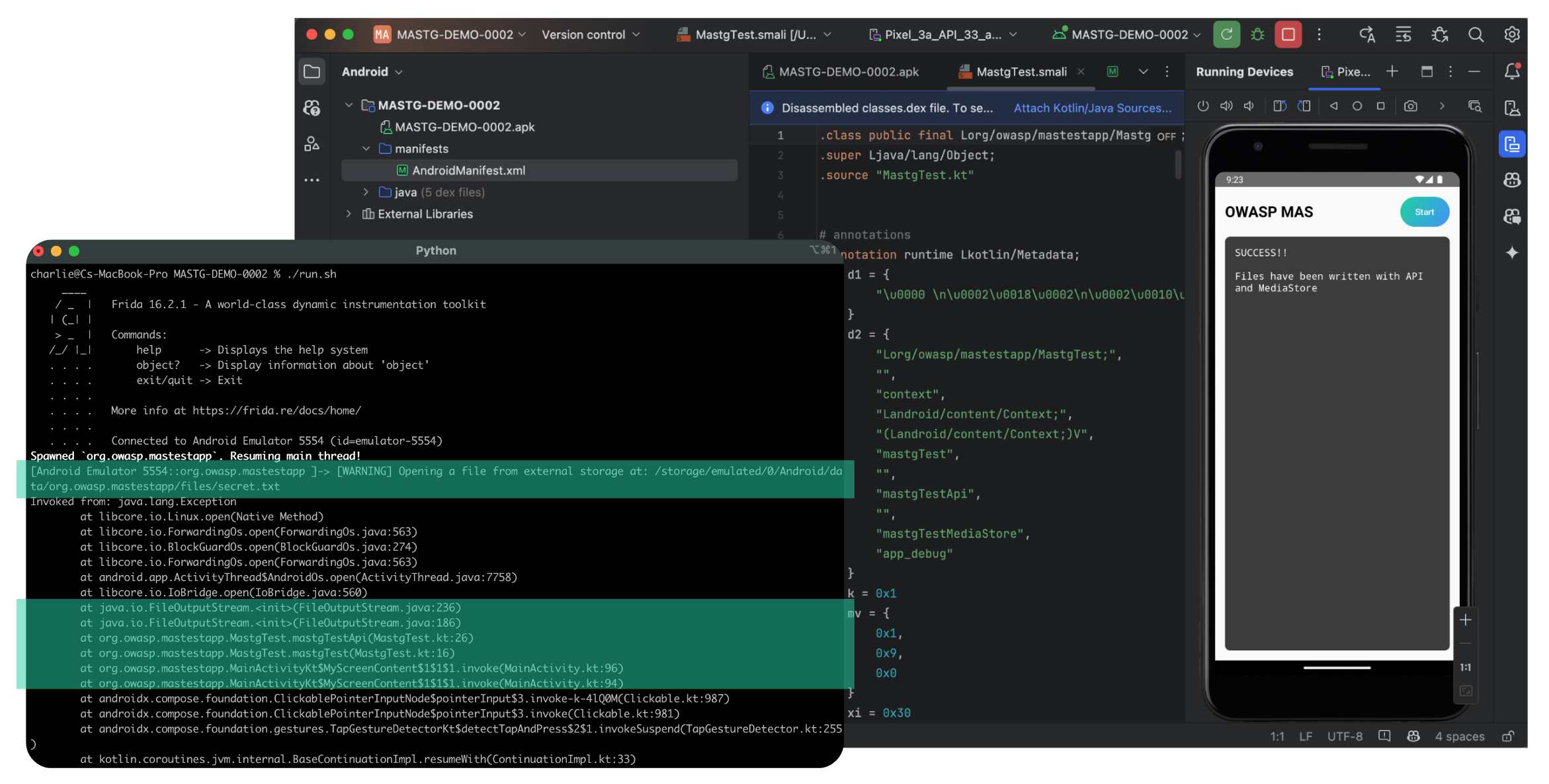

- Consistent, high-value contributions: Numerous pull requests, many focused on porting v1 tests to v2, always with complete demos and documentation.

- Peer reviews and thought leadership: Providing in-depth, actionable feedback on pull requests and issues, helping maintain the quality and integrity of the MASTG and the ambitious refactoring to v2.

Their involvement culminated in a major milestone during the OWASP Project Summit in November 2024. This five-day event brought together mobile security experts from around the globe. Among the ~40 pull requests created during the summit, the majority were contributed by Guardsquare's team: Dennis Titze, Jan Seredynski, Nuno Antunes, and Pascal Jungblut.

Why They Committed, and Why It Matters

We asked the Guardsquare team why they decided to commit to OWASP MAS. Here's what they said:

"We recognized that typical standards for application security are lacking the precision and nuance that is relevant to mobile application architectures. We felt the OWASP MAS project was headed in the right direction by providing much more specific and actionable guidance for mobile development teams on how to secure their applications." — Ryan Lloyd

A Win-Win Situation

Their contributions have not only benefited the OWASP MAS project and its community but have also had a direct impact on Guardsquare's own team and offerings:

"Our participation has raised awareness about mobile app security, something that's still too often an afterthought. Through a standards-based approach, we're helping to bring vital security topics to the forefront, which in turn drives more interest in our solutions." — Ryan Lloyd

Beyond the organizational benefits, participation in MAS has been a rewarding experience for the individual contributors:

"This project really expanded my perspective on security. I used to focus mainly on reverse engineering and resilience, but this effort pushed me into new areas, like the MASVS-STORAGE category, which deepened my understanding of how mobile apps handle sensitive data." — Jan Seredynski

"The project gave me the opportunity to connect with people from diverse backgrounds, sparking dynamic conversations about secure implementation practices. It's been very rewarding to see the standards evolve as a result of these collaborations." — Dennis Titze

A Model for Others to Follow

Guardsquare's journey is a blueprint for how companies can engage meaningfully with open security standards. Their commitment, ongoing into 2025 and beyond, sets a powerful example.

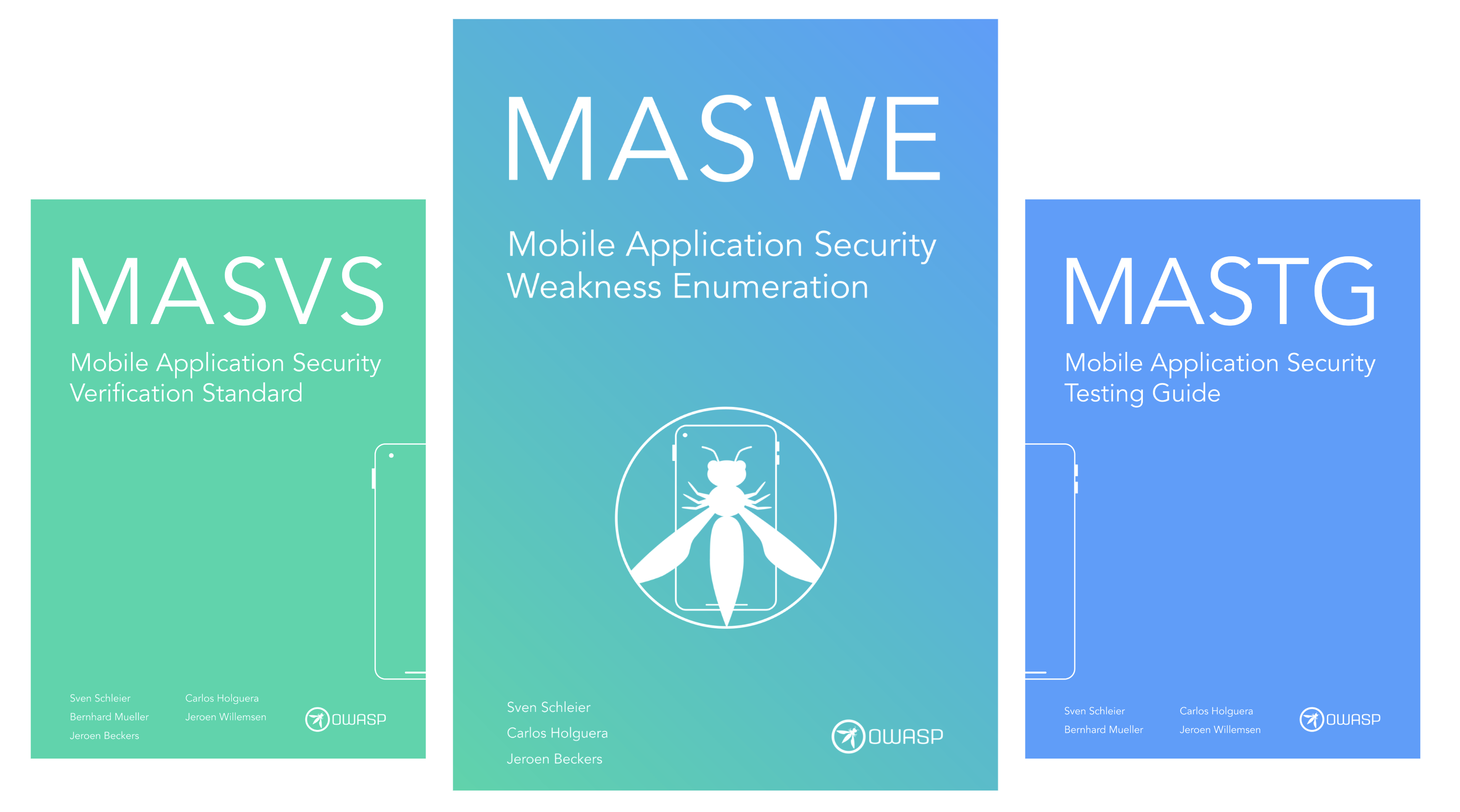

"The mobile app security landscape benefits enormously from diverse perspectives. By expanding the base of contribution, we can offer more depth and breadth in the resources we're providing to the community, and reach our goal of a complete MASVS/MASTG refactor more quickly." — Ryan Lloyd

We hope others follow their lead. Their story shows what's possible when a company commits time, talent, and heart to improving the state of mobile application security.

Ready to Join?

If your organization is passionate about mobile security and ready to make a real impact, consider joining the MAS Task Force. Whether you're contributing tests, reviewing PRs, or helping shape the next generation of standards, there's a place for you.

You can read more about the MAS Advocate status and our other contributors on our website.

Congratulations once again to Guardsquare! We're proud to have you on board as a MAS Advocate.

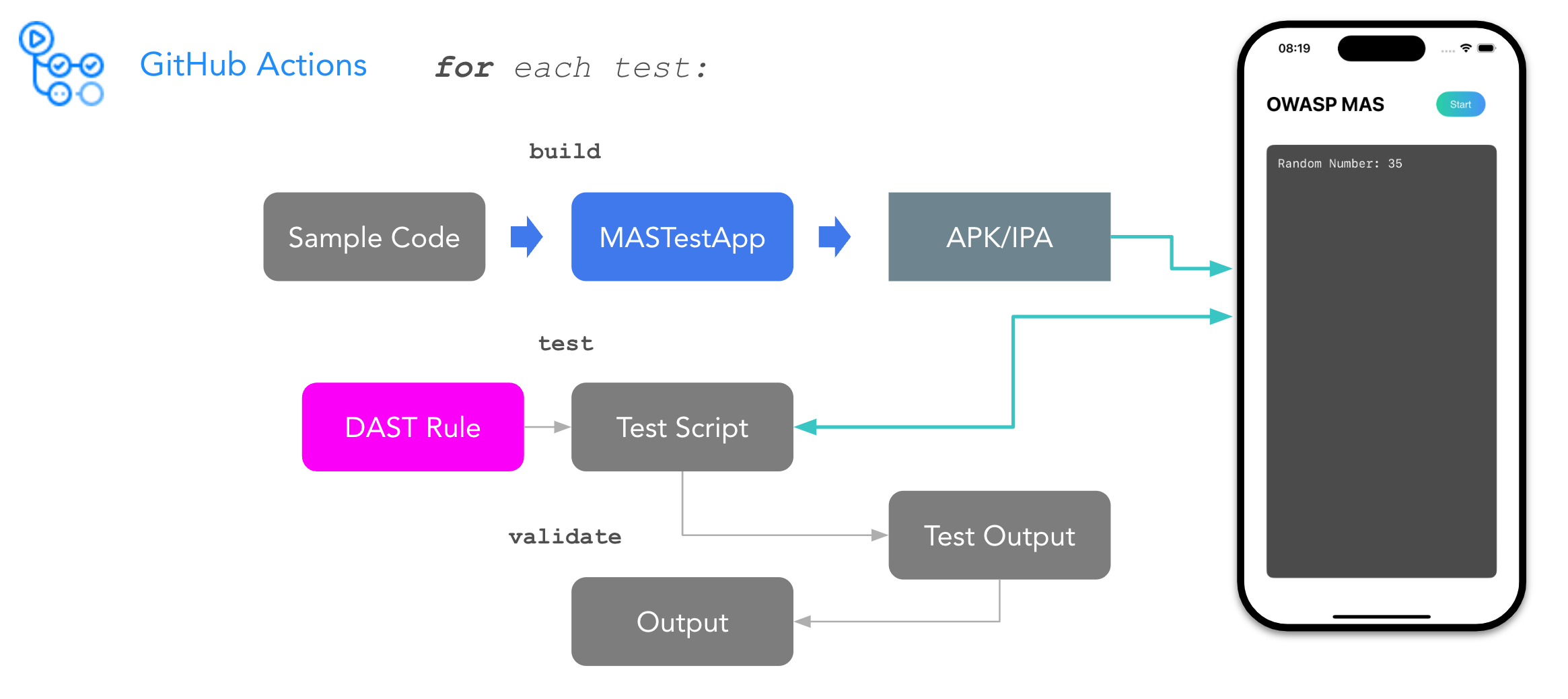

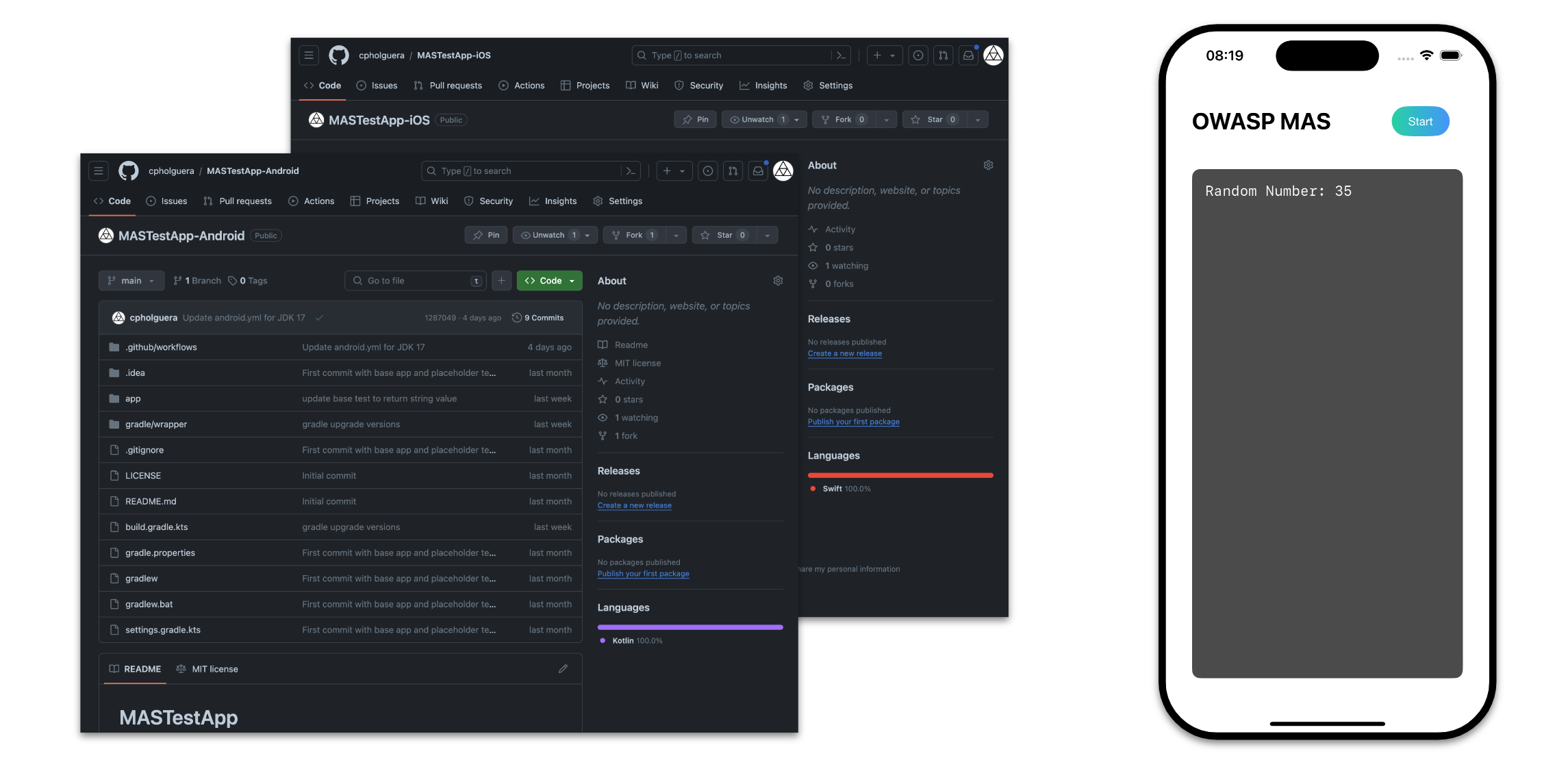

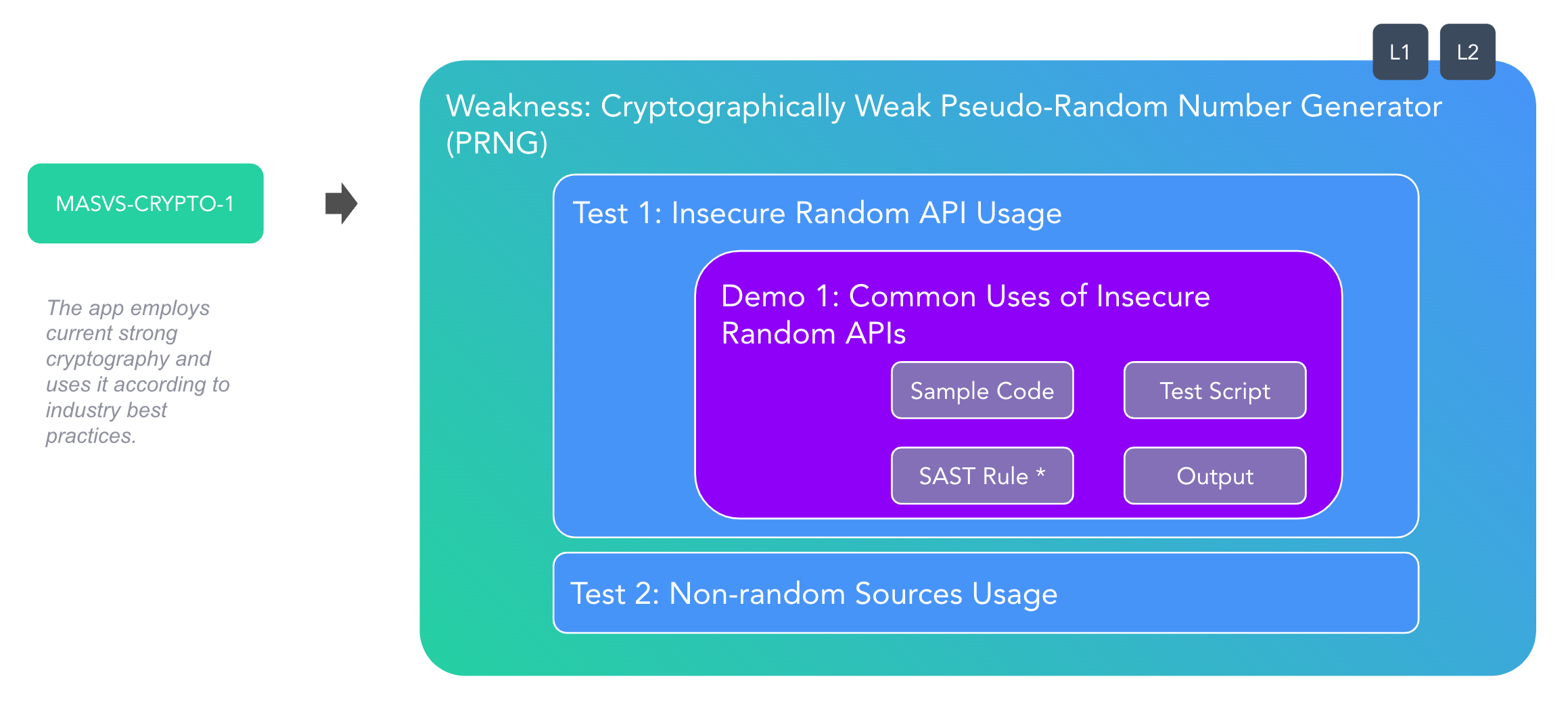

These demos can also be used as experimental playgrounds to improve your skills and practice with different cases as you study mobile app security with the MASTG. For example, you can try to reverse engineer the app and see whether you find the same issues as the demo, or you can try to fix the issues and validate the fix.