Introducing the new Mobile App Security Weakness Enumeration (MASWE)

The OWASP MAS project continues to lead the way in mobile application security, providing robust and up-to-date resources for developers and security professionals alike. Our team has been working diligently with the MAS community and industry to refactor the Mobile Application Security Verification Standard (MASVS) and the Mobile Application Security Testing Guide (MASTG). In this blog post, we'll walk you through our latest addition to the MAS project: the brand new Mobile App Security Weakness Enumeration (MASWE).

Refactoring the MASTG

We began the refactoring process in 2021, focusing first on the MASVS and then on the MASTG. Our primary goal was to break the MASTG v1 into modular components, including tests, techniques, tools, and applications.

This modular approach allows us to maintain and update each component independently, ensuring that the MASTG remains current and relevant. For example, in our previous structure, the MASTG consisted of large test cases within a single markdown file. This was difficult to maintain, made it challenging to reference specific tests, and made it impossible to attach metadata to each test.

The new structure divides tests into individual pages (Markdown files with metadata), each with its own ID (MASTG-TEST-****) and links to relevant techniques (MASTG-TECH-****) and tools (MASTG-TOOL-****). This encapsulation ensures that each test is easily referenced and promotes reusability across all MAS components. For example, you can open a test and see what tools and techniques are being used, and soon you'll be able to do the same in reverse: open a tool or technique and see all the tests that use it. This deep cross-referencing can be extremely powerful when exploring the MASTG.
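As a rough illustration of the reverse lookup described above, the following sketch inverts a "test to components" map into a "component to tests" map. It is not how the MAS tooling is implemented: the class name `CrossReferenceIndex`, the method `invert`, and the IDs ending in `-A`, `-B`, `-X` and `-Y` are hypothetical placeholders, and it assumes each test's metadata has already been parsed into a list of referenced IDs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class CrossReferenceIndex {

    // Inverts "test -> referenced components" into "component -> tests that use it".
    public static Map<String, List<String>> invert(Map<String, List<String>> testToComponents) {
        Map<String, List<String>> componentToTests = new TreeMap<>();
        for (Map.Entry<String, List<String>> entry : testToComponents.entrySet()) {
            for (String component : entry.getValue()) {
                componentToTests
                        .computeIfAbsent(component, key -> new ArrayList<>())
                        .add(entry.getKey());
            }
        }
        return componentToTests;
    }

    public static void main(String[] args) {
        // Placeholder IDs for illustration only, not real MASTG entries.
        Map<String, List<String>> forward = new TreeMap<>();
        forward.put("MASTG-TEST-A", List.of("MASTG-TECH-X", "MASTG-TOOL-Y"));
        forward.put("MASTG-TEST-B", List.of("MASTG-TOOL-Y"));

        // Print every technique or tool together with the tests that reference it.
        invert(forward).forEach((component, tests) ->
                System.out.println(component + " is used by " + tests));
    }
}
```

Because each test lives in its own file with its own metadata, an index like this can be rebuilt from the forward links alone, without maintaining the reverse links by hand.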

Introducing MASWE

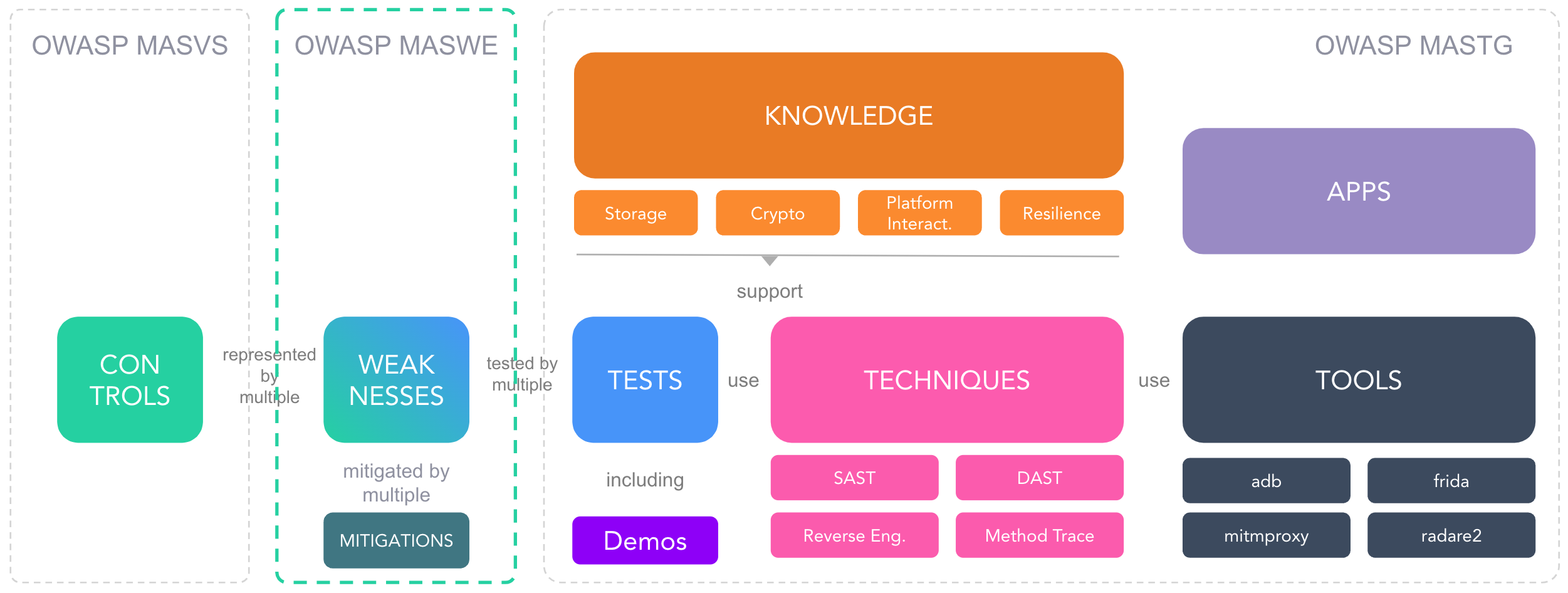

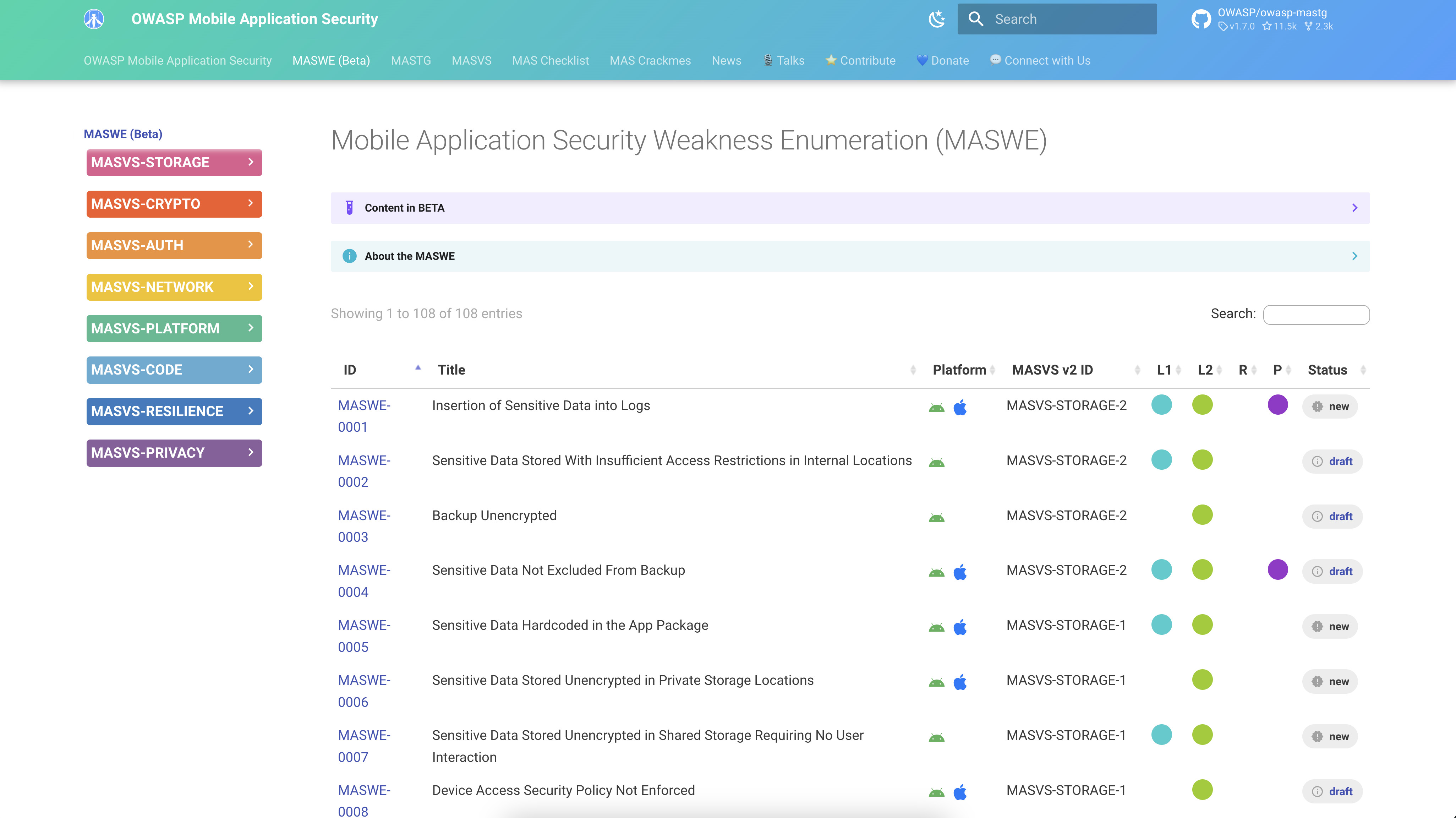

A significant addition to our project is the introduction of MASWE, designed to fill the gap between high-level MASVS controls and low-level MASTG tests. The MASWE identifies specific weaknesses in mobile applications, similar to Common Weakness Enumerations (CWEs) in the broader software security industry. This new layer provides a detailed description of each weakness, bridging the conceptual gap and making the testing process more coherent.

Now MASVS, MASWE and MASTG are all seamlessly connected. We start with the high-level requirements, zoom in on the specific weaknesses, drop down to the low-level tests, and finally get hands-on with the demos. Here's how it works:

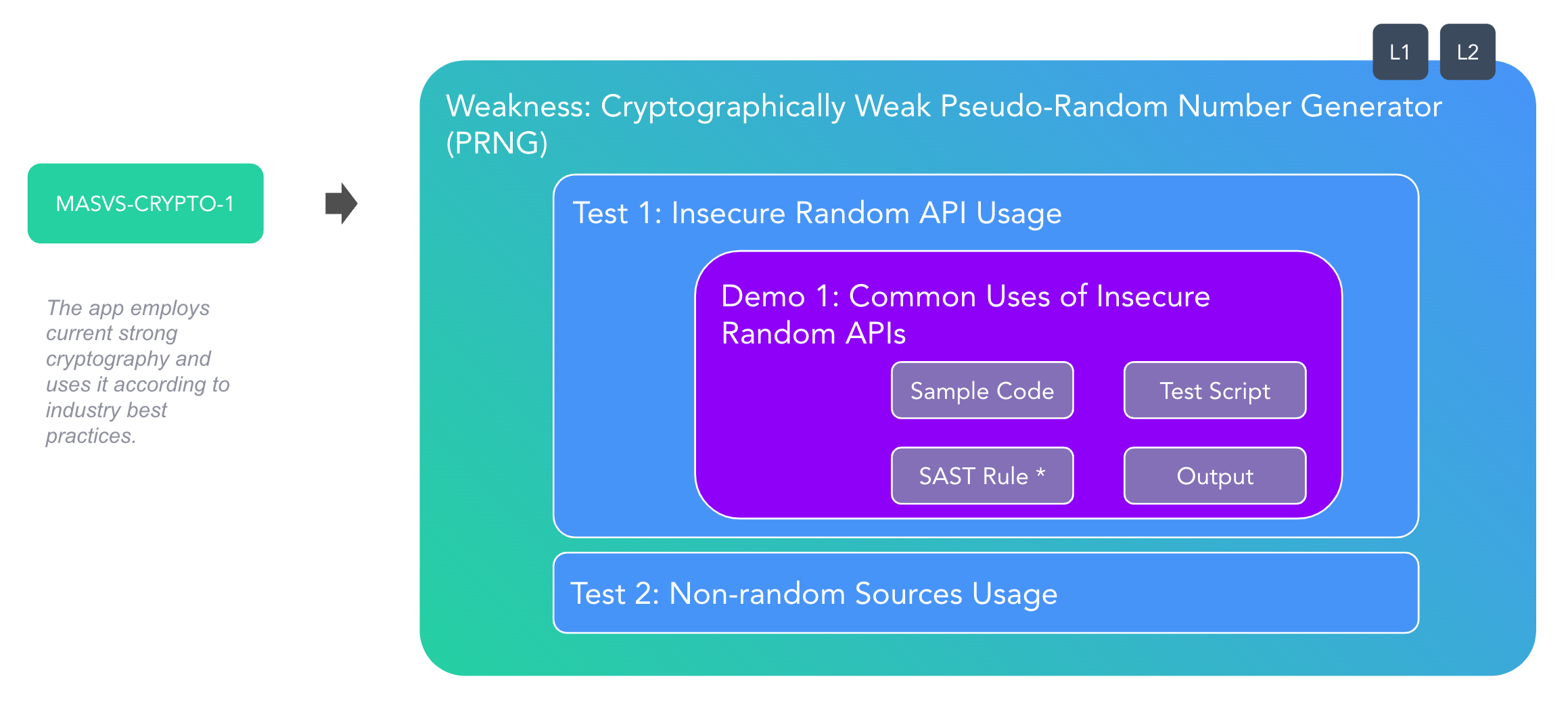

- MASVS Controls: High-level platform-agnostic requirements.

For example, "The app employs current cryptography and uses it according to best practices." (MASVS-CRYPTO-1).

- MASWE Weaknesses: Specific weaknesses, typically also platform-agnostic, related to the controls.

For example, "use of weak pseudo-random number generation" (MASWE-0027).

- MASTG Tests: Each weakness is evaluated by executing tests that guide the tester in identifying and mitigating the issues using various tools and techniques on each mobile platform.

For example, testing for "insecure random API usage on Android" (MASTG-TEST-0204).

- MASTG Demos: Practical demonstrations that include working code samples and test scripts to ensure reproducibility and reliability.

For example, a sample using Java's `Random()` instead of `SecureRandom()` (MASTG-DEMO-0007).
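To make the weakness in the last example concrete, here is a minimal sketch contrasting the two APIs. It is not the code from MASTG-DEMO-0007; the class name `RandomTokenDemo` and both method names are hypothetical, and the fixed seed is used only to make the predictability visible.

```java
import java.security.SecureRandom;
import java.util.Random;

public class RandomTokenDemo {

    // Weak: java.util.Random is a linear congruential generator, so the same
    // seed always produces the same sequence and the output is predictable.
    public static String weakToken(long seed) {
        Random random = new Random(seed);
        return Long.toHexString(random.nextLong());
    }

    // Stronger: SecureRandom is designed to produce cryptographically strong values.
    public static String secureToken() {
        SecureRandom secureRandom = new SecureRandom();
        byte[] bytes = new byte[16];
        secureRandom.nextBytes(bytes);
        StringBuilder hex = new StringBuilder();
        for (byte b : bytes) {
            hex.append(String.format("%02x", b));
        }
        return hex.toString();
    }

    public static void main(String[] args) {
        // The two weak tokens are identical because they share a seed.
        System.out.println("weak:   " + weakToken(42L));
        System.out.println("weak:   " + weakToken(42L));
        // The secure token differs on every run.
        System.out.println("secure: " + secureToken());
    }
}
```

A test for this weakness would look for calls like the one in `weakToken` in security-relevant code paths, such as token or key generation.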

Practical Applications and Demos

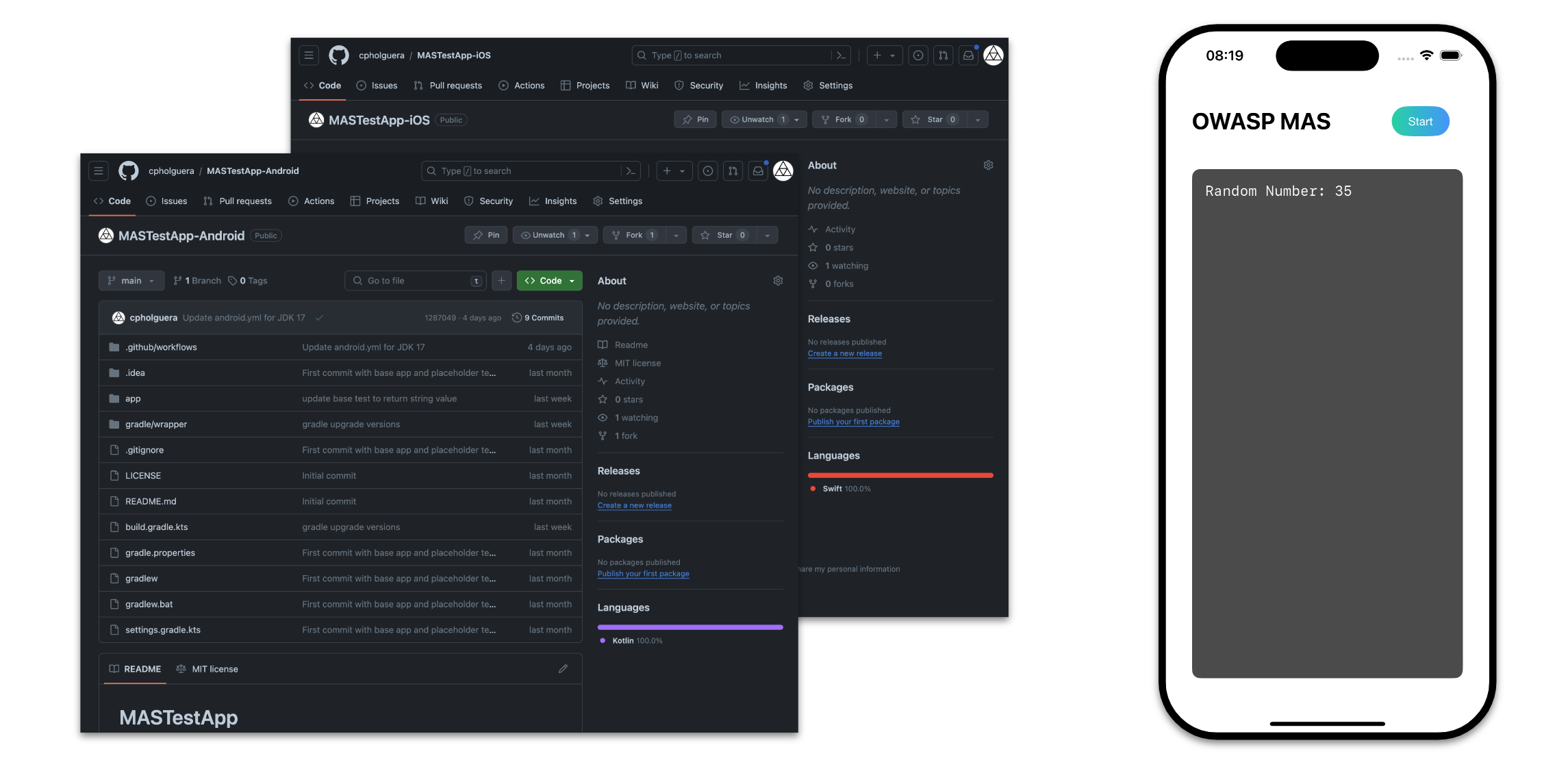

To ensure our guidelines are practical and reliable, we've developed new MAS Test Apps for both Android and iOS.

These simple, skeleton applications are designed to embed code samples directly, allowing users to validate and experiment with the provided demos. This approach ensures that all code samples are functional and up-to-date, fostering a hands-on learning experience.

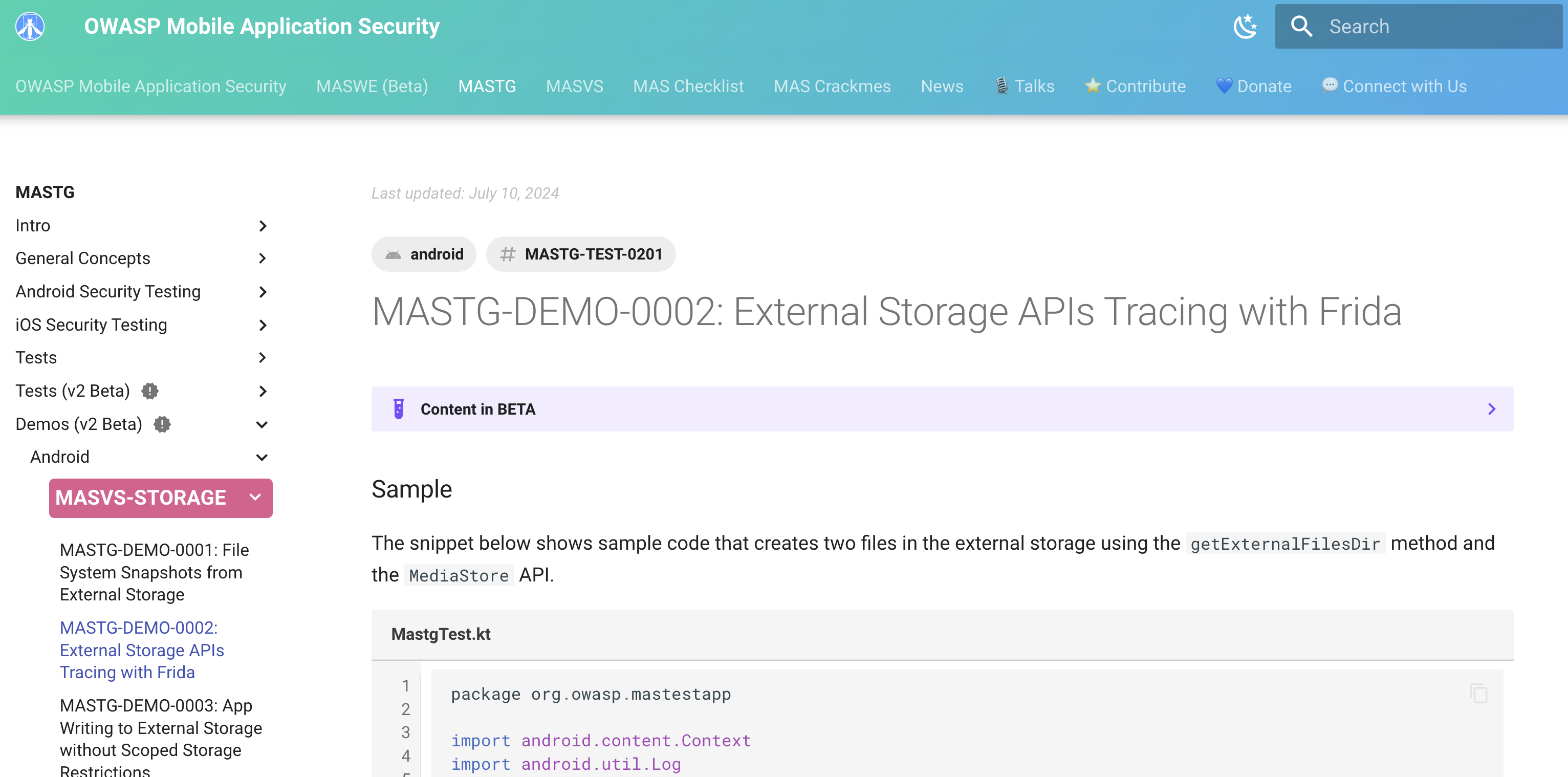

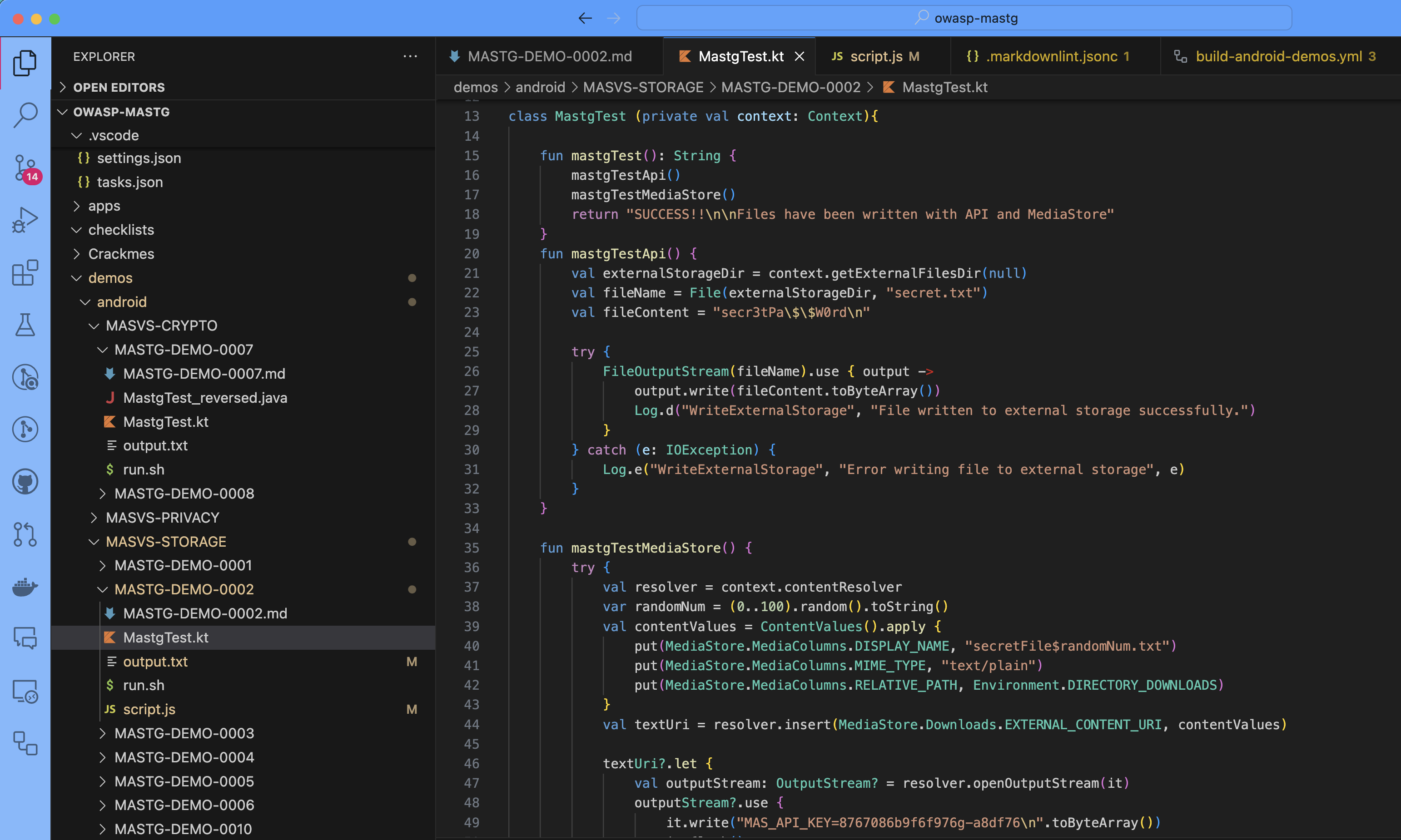

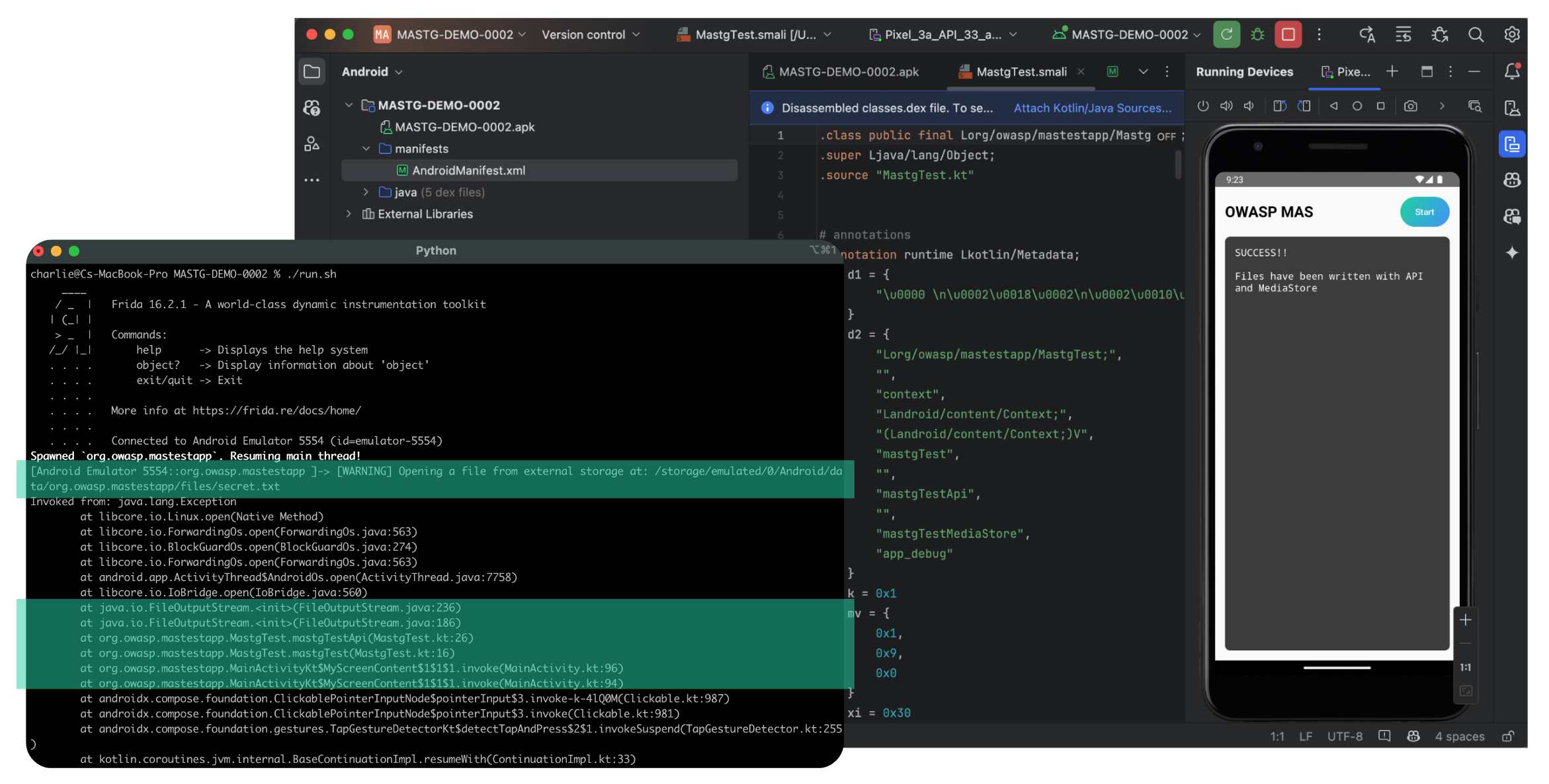

For example, to test for secure storage, MASTG-DEMO-0002 shows how to use dynamic analysis with Frida to identify the issues in the code. The demo includes:

- a Kotlin code sample (ready to be copied into the app and run on a device)

- the specific test steps for this case using Frida

- the shell script including the Frida command

- the Frida script to be injected

- the output with explanations

- the final evaluation of the test

You can run everything on your own device and validate the results yourself! Just clone the repository and navigate to the demo folder, install Frida on your computer and your Android device, and follow the steps.

The demos are also useful for advanced researchers and pentesters who need to quickly validate specific scenarios. For example, it's very common to find cases where Android behaves differently depending on the version or the manufacturer. With these demos, you can quickly check whether a certain issue is present on a specific device or Android version.

Automation with GitHub Actions

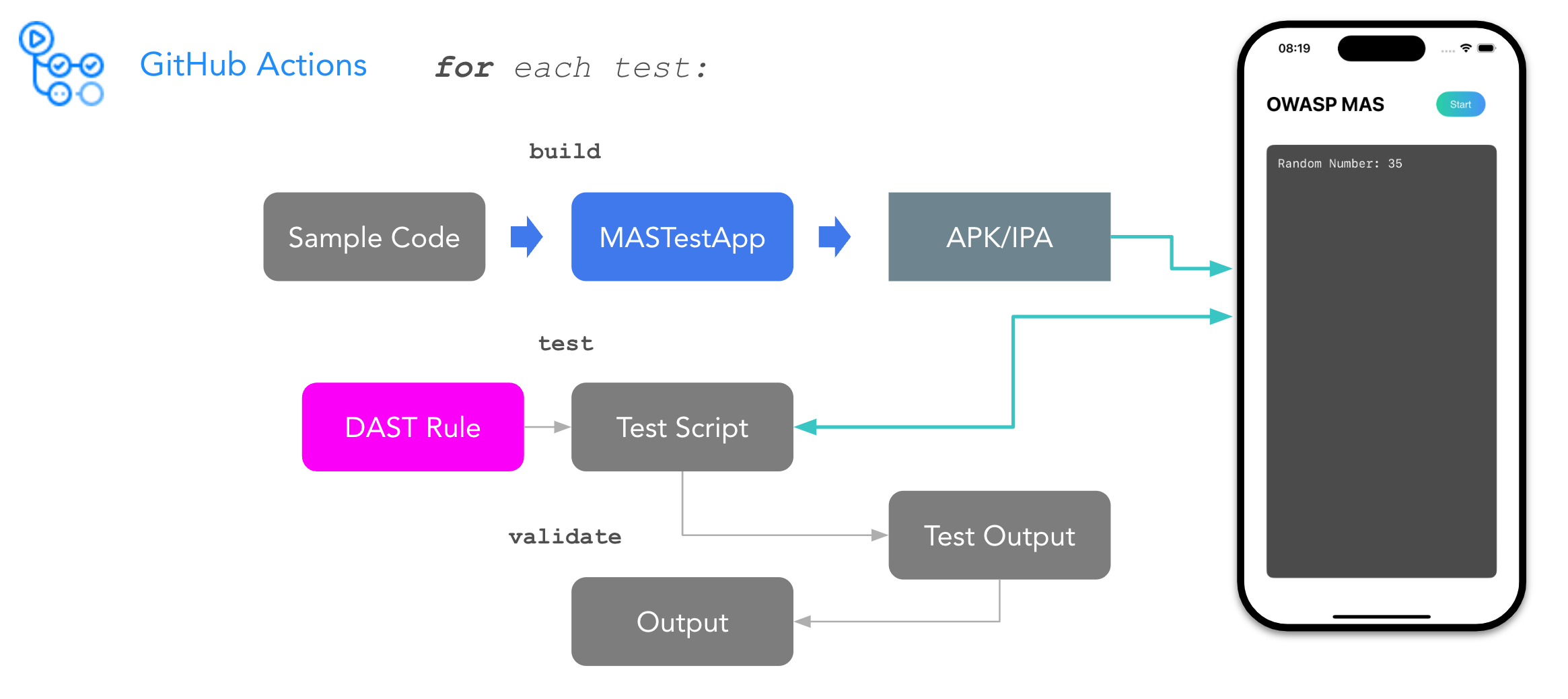

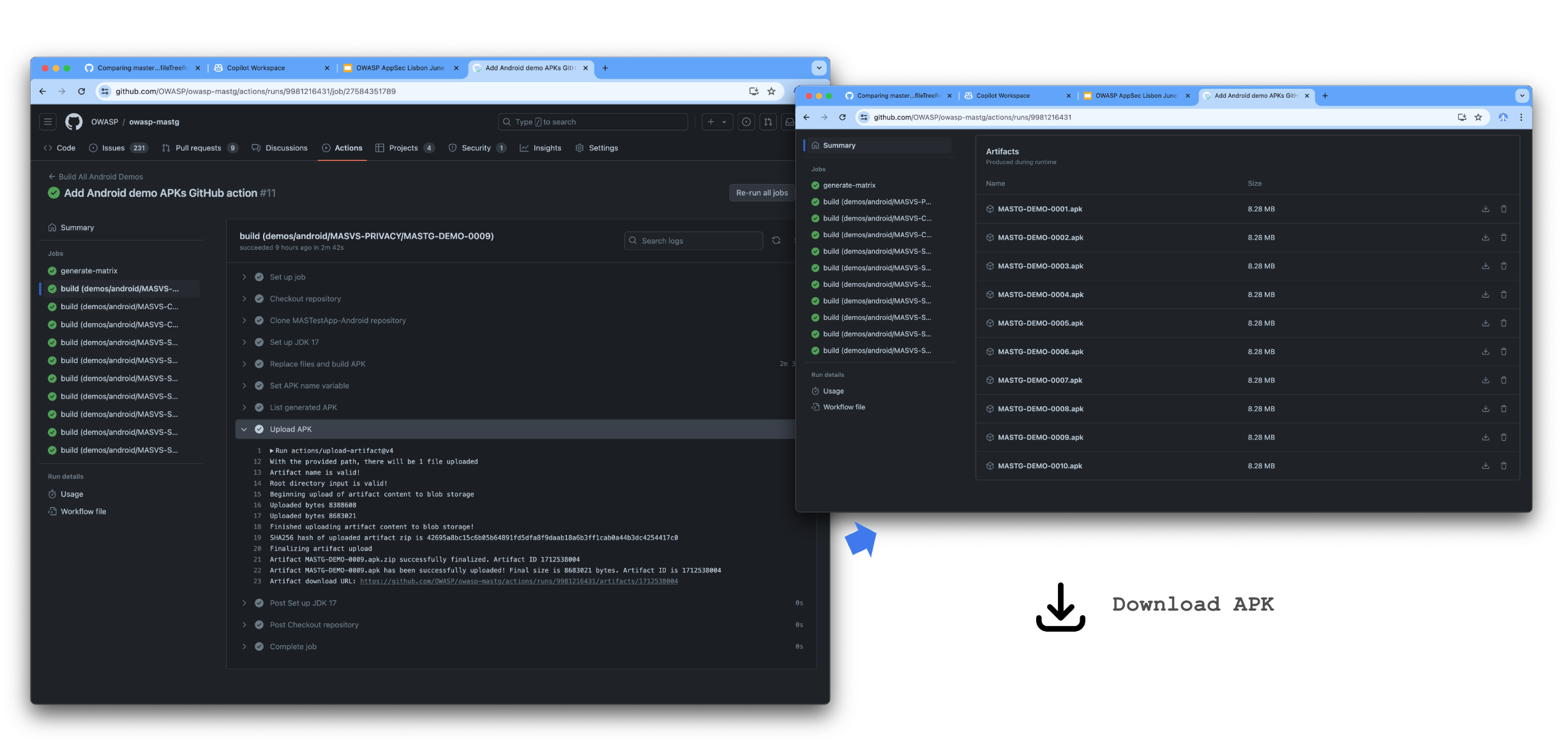

Going forward, we want to automate the process of creating and validating the new demos, and ensure that the tests remain functional over time. We'll be using GitHub Actions to do this. Here is the plan:

- Build the app: Automatically build the APK/IPA for Android and iOS.

- Deploy the app to a virtual device: Install and run the generated app on a virtual device.

- Execute tests and validate results: Execute static tests with tools like semgrep or radare2 as well as dynamic tests using Frida and mitmproxy on the target device. Finally, compare the test results with the expected output.

We've currently implemented a PoC for the first step (only for Android APKs), and are working on the next steps. If you're interested in contributing to this effort, please let us know!
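The last step of the plan, comparing test results with the expected output, could be sketched roughly as follows. This is only an assumption about how such a check might look, not the project's actual pipeline: the class `OutputValidator`, the method `diff`, and the sample lines in `main` are hypothetical, and the comparison is a plain line-by-line match that ignores leading and trailing whitespace.

```java
import java.util.ArrayList;
import java.util.List;

public class OutputValidator {

    // Returns a description of every line that differs between the expected and
    // the actual output; an empty list means the demo output still matches.
    public static List<String> diff(List<String> expected, List<String> actual) {
        List<String> differences = new ArrayList<>();
        int max = Math.max(expected.size(), actual.size());
        for (int i = 0; i < max; i++) {
            String e = i < expected.size() ? expected.get(i).trim() : "<missing>";
            String a = i < actual.size() ? actual.get(i).trim() : "<missing>";
            if (!e.equals(a)) {
                differences.add("line " + (i + 1) + ": expected '" + e + "' but got '" + a + "'");
            }
        }
        return differences;
    }

    public static void main(String[] args) {
        // Placeholder output lines for illustration, not real tool output.
        List<String> expected = List.of("result line 1", "result line 2");
        List<String> actual = List.of("result line 1", "result line 2 changed");

        List<String> differences = diff(expected, actual);
        differences.forEach(System.out::println);

        // A CI job would typically fail when any difference is found.
        if (!differences.isEmpty()) {
            System.exit(1);
        }
    }
}
```

Real tool output often contains values that change between runs, such as timestamps or memory addresses, so a practical check would likely need to normalize or filter those lines before comparing.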

Feedback Wanted

We encourage you to explore the new MASWE, MASTG tests and MASTG demos. Your insights and experiences are invaluable to us, and we invite you to share your feedback in our GitHub discussions to help us continue to improve. This way we can ensure that our resources are practical, reliable, and valuable in real-world use.

You can also contribute to the project by creating new weaknesses, tests, techniques, tools, or demos. We welcome all contributions and feedback, and we look forward to working with you to make the MAS project the best it can be.